The Behavior Rating Inventory of Executive Function (BRIEF) [Roth, 2014] is an executive functioning scale widely used among clinical psychologists. The BRIEF is recommended for use in psychological disorders, such as attention deficit hyperactivity disorder (ADHD) and autism spectrum disorder (ASD), and has also been found to show changes in a variety of medical conditions, including traumatic brain injury [Chapman, 2010] and Alzheimer’s disease [Rabin, 2006]. Although it has been featured in over 400 peer-reviewed papers and used in multiple countries [Roth, 2015], to our knowledge, the BRIEF has not yet been validated in Russia in general nor in previously institutionalized Russian adolescents and adults specifically. Furthermore, the producers of the version of the BRIEF studied here — the BRIEF2 for ages 5–18 — state that it has only been officially normed within English-speaking/reading samples and standardized based on USA census statistics [Gioia, 2015]. However, translated versions exist and have been studied outside the USA [Fournet, 2015]. The current study assessed the performance of the BRIEF2 Self-Report Form validity scales when the form was translated to Russian and administered to Russian adolescents and adults who were either previously institutionalized or raised in biological families.

Many efforts to examine the effectiveness of the BRIEF in languages other than English and countries other than the USA have involved analyzing its factor structure. For example, the parent and teacher forms of a French version of the BRIEF were found to be reliable and to have a good model fit for two- or three-factor models comprised of the individual subscales [Fournet, 2015]. A Dutch translation of the BRIEF was characterized by high internal consistency, high test-retest stability, and a factor structure similar to that found in U.S. participants [Huizinga, 2011]. However, some articles on BRIEF translations do not mention the validity scales at all, and others do not analyze their performance in depth. For example, an article on the performance and factor structure of the BRIEF in a Spanish clinical sample mentioned excluding participants who were flagged by the Negativity or Inconsistency scales but did not report the percentage of participants excluded or compare performance on these scales to USA norms [Fernández, 2014]. In the current study, we checked the performance of the validity scales, even though this approach is not common in the literature on BRIEF translations. We did so because the validity scales may be used in future work to exclude participants who answer atypically, and we wanted to check whether such exclusions could be made using U.S. thresholds.

Extensive testing of translated measures is also important because cultural bias in psychological testing is a major ethical concern for clinicians [Reynolds, 2012] and researchers. For instance, mean scores on assessments such as IQ tests tend to differ among minority groups [Reynolds, 2012], making the cultural validity of psychological assessments a topic of heated debate. Besides linguistic equivalence in translations, other factors can vary across cultures, such as equivalence in constructs measured, familiarity with the type of assessment, and cultural relevance of measures [Byrne, 2016]. A majority of psychological research has been conducted in Western Industrialized Rich Democracies; therefore, the validity of assessments in diverse cultures is a concern [Leong, 2019]. Additionally, many cross-cultural instrument adaptation studies have relied on factor analysis and underutilized other strategies [Arafat, 2016]. To further the goal of testing assessment instruments thoroughly before using them in new cultures, we aimed to test the BRIEF2 Self Report Form in Russian and previously institutionalized samples, starting here with its validity scales.

According to the BRIEF2 manual, rater characteristics such as parent education level and race/ethnicity did not contribute meaningfully to BRIEF self-report standardized scores [Gioia, 2015]. For example, parent education accounted for less than 3% of the variability in the self-report data, and race/ethnicity was not significantly related to BRIEF2 self-report scores. Therefore, some factors that may vary cross-culturally, such as education and race/ethnicity, might not contribute substantially to differences in BRIEF performance. Nonetheless, it is important to test performance of the BRIEF before using it extensively in new languages and countries.

The standardization sample used for the BRIEF2 manual included participants with no history of special education, psychotropic medication usage, or neurological disorders (such as ADHD or ASD); 803 of these participants completed the self-report form [Gioia, 2015]. The current study, which was part of a larger project on institutionalization, did not screen for type of education or medication use, so our samples might not match the standardization samples on those aspects. Unfortunately, the BRIEF2 has not been standardized in a previously institutionalized US sample, which would have been interesting to compare to our Russian institutionalized sample. The manual includes general clinical samples, as well as samples prone to deficits in executive functioning, including ADHD and ASD.

Institutional care, defined here as care in government institutions without a family structure (e.g., orphanages or baby homes), in Russia, is often characterized by psychosocial deprivation, frequent changes in caregivers, and the deprivation of close individual contact between the child and caregiver [Lantrip, 2016; Tirella, 2008]. Children in institutions may have psychosocial difficulties associated with the absence of personal space due to living in the same room as multiple other children, a low level of adaptive care, and stigmatization from peers with whom they attend school [Muhamedrahimov, 2015; Tsvetkova, 2016]. Russian institutions disproportionately contain children with disabilities, although typically developing infants and children are also placed in institutions [Human Rights Watch, 2014]. U.S. institutions are often termed “group homes,” which house between 7 and 12 children, or “residential care.” These institutions primarily house those who need services such as therapy and medical care for severe behavioral issues or mental disorders [Child Welfare Information; Wiltz, 2018] but also contain typical children awaiting foster care placement. In both the U.S. and Russia, institutions are highly structured [Lantrip, 2016; Tirella, 2008; Child Welfare Information]. Children in U.S. and Russian institutional care may face some of the same struggles, such as trauma from changes in guardians [Muhamedrahimov, 2015; Tsvetkova, 2016]. One difference is that although institutional care is improving in quality and becoming less common in Russia, it is still more common than in the U.S. For instance, approximately 19% of Russian children without parental care are placed in institutions [Posarac, 2021], versus around 10% of children without parental care in the U.S. [Children’s Bureau, 2020]. This 19% comprises a large number of individuals because the rate of public care is high in the Russian Federation (1673 in 100,000 children as of 2021) [Posarac, 2021].

Executive Function Assessment

Executive function assessments are important for clinicians because executive functioning is linked to academic achievement [Ahmed, 2019], health-related quality of life [Brown, 2015], language ability [Gooch, 2016], and other major life outcomes. Executive functioning is also implicated in a range of disorders, including ADHD [Martel, 2007], depression [Watkins, 2002], and schizophrenia [Chey, 2002], among many others, as well as early life experiences. Specifically, a history of institutional care has been shown to be related to deficits in executive function, as measured with cognitive performance tasks and neuroimaging [Lamm, 2018; McDermott, 2012; Merz, 2016]. The number of individuals with a history of such care is large (see above); therefore, executive functioning assessments for clinicians working with previously institutionalized individuals are important.

The BRIEF2 is one such assessment that has practical advantages over some executive function assessments commonly used in research or clinical practice. It contains seven subscales of executive functioning in just one 55-item questionnaire: Inhibit, Self-Monitoring, Shifting, Emotional Control, Task Completion, Working Memory, and Planning and Organization [Gioia, 2015]. Therefore, it is comprehensive and quick. It does not require expensive neuroimaging or computing equipment. It also does not involve multiple tasks with different sets of instructions to assess different aspects of executive functioning, which might be difficult for those with attentional deficits to complete. If demonstrated to be valid in Russia and in previously institutionalized samples, it would be a useful tool for clinical evaluation and research in Russia in general and in particular for studying individuals with a history of institutionalization. The current study examined the validity scales built into the BRIEF2 Self-Report form designed to detect atypical, inconsistent, or overly negative responses.

Method

Participants. Recruitment took place in major Russian cities. Those at least 18 years of age gave their written informed consent on a consent form approved by the Ethical Committee of the St. Petersburg State University #02-199 on May 3, 2017. Those under 18 had their caregivers sign consent forms. Participants were compensated with 1000 rubles, which came out to an hourly rate approximately equal to the average local hourly wage at the start of the study in 2017. They were primarily recruited through orphanages, social assistance centers, and secondary educational institutions (e.g., lyceums, technical schools, colleges), and some were self-referred via Internet ads. Participants were included in the institutional care (IC) group if institutional records or the participant reported at least 6 months of institutionalization on the initial study screening. The biological family care (BFC) group was raised exclusively in their biological families. These participants were recruited to fall within a similar age range and educational level as the IC group. Participants were native Russian speakers.

Participants were adolescents and adults who took part in a larger project on institutionalization outcomes (n=677). Of these, 654 completed the BRIEF2 Self Report Form, and 636 completed a medical questionnaire. After excluding four participants who selected multiple answers on some BRIEF items and six who failed to complete all BRIEF items, there were 625 participants who completed both the BRIEF2 Self Report Form and the medical questionnaire. Of the 625 who completed both the BRIEF2 and the medical questionnaire, 53 were excluded because they did not select “no” on medical questions asking if they had a recent history of head trauma or neurological illness. The final sample contained 572 participants (331 female, 241 male; 315 BFC, 257 IC). Of these participants, 182 were adolescents (68 BFC, 114 IC; 103 female, 79 male; ages 15–17 years, mean age=16.38, SD=0.64), and 390 were adults (247 BFC, 143 IC; 228 female, 162 male; ages 18–38 years, mean age=22.47 years, SD=4.68). In this sample, 550 participants completed the Culture Fair IQ Test (CFIT) [Cattell, 1973]. Because IQ was not the primary focus of the current study or an exclusion criterion, we did not exclude participants who did not complete the CFIT. Participants were involved in a larger project that included additional assessments not analyzed here, including 4 EEG tasks, a handedness questionnaire, and a behavioral battery of language ability.

Because participants were recruited primarily through educational institutions with the goal of approximately matching the IC and BFC groups on age and education, we did not control our sample to make it perfectly representative of the overall Russian population. Median income for adults in our sample was greater than 30000 rubles, versus a median of 28345 rubles in the Russian Federation at the time of data collection in 2017 [Federal State Statistics] (https://eng.rosstat.gov.ru/). In our sample, 25% of participants aged 15–29 had a job. Based on Russian government statistics stating that 68.5% of individuals aged 15–72 have jobs and that only 20.2% of the employed were 15–29 years, we estimate that approximately 13% of the 15- to 29-year-old general population was employed at the time of data collection [Federal State Statistics]. In our sample, employment rates may have been higher because we focused recruitment on secondary educational institutions with a high percentage of students from orphanages (such as technical schools). Students from these types of institutions may have been more likely to have additional earnings in comparison with full-time students of bachelor’s degree-granting universities or individuals with less education. The IC and BFC groups were not matched on all income-related variables. For example, satisfaction with income was lower in the IC group (χ2(1)=30.945, p<.001), as was employment (χ2(1)=37.127, p<.001).

Assessments

Culture Fair IQ Test (CFIT). The Culture Fair Intelligence Test (CFIT; Scale 2; Form B [Cattell, 1973]) was used to assess non-verbal intelligence (IQ). IQ data from this study did not fit a normal distribution and, therefore, could not be used to calculate standardized scores. Instead, IQ scores were calculated using the Cattell Culture Fair IQ Key standard scores for Form A, Scale 2 based on both a USA sample and a UK sample. The USA scoring most closely gave the data a normal distribution, so we used the USA scoring guide.

BRIEF2 Self-Report Form. The BRIEF2 Self-Report Form is a 55-item questionnaire originally designed for ages 11–18. It takes approximately 10 minutes to complete. Each item on the BRIEF2 describes a behavior that represents a problem with executive functioning and asks the participant to rate whether they never, sometimes, or often have the problem [Gioia, 2015]. The item scores (1 = never, 2 = sometimes, 3 = often) are then summed into composite scores within each of 7 subscales (Working Memory, Inhibit, Self-Monitor, Shift, Emotional Control, Task Completion, Plan/Organize). Therefore, higher scores on the BRIEF2 indicate worse executive functioning. For the current study, all items were translated to Russian and then translated a second time into English (a commonly accepted method called back-translation [Beaton, 2000]) to check the accuracy of the first translation. At the time of the current analyses, accuracy of the Russian translations was checked once again by a native Russian speaker with English fluency. To be able to analyze adult and adolescent data together in analyses that are part of our larger project on institutionalization, we used the same version of the questionnaire (the BRIEF2 Self-Report) for all participants (adults and adolescents), and in the current paper checked whether the validity scales performed differently in the two age groups. Many items on the BRIEF2 for ages 11–18 and the Adult (BRIEF-A [Roth, 2005]) Self-Report forms are identical or very similar, suggesting that the 11- to 18-year-old version might also accurately assess young adults.

Validity Scales. The BRIEF2 Self-Report contains three validity indicators designed to flag individuals with questionable responses: Infrequency, Inconsistency, and Negativity scales.

Infrequency. The Infrequency scale contains three questions that are not part of the executive function subscales and are highly unusual to endorse, even for severely cognitively impaired participants, according to the professional manual for the BRIEF2 (e.g., endorsing that they forget their own name) [Gioia, 2015]. Thus, these items are designed to detect highly atypical answers and may reflect falsehoods or extreme impairments.

Inconsistency. The Inconsistency scale identifies inconsistency between answers to similar questions. Discrepancy scores are computed for pairs of similar items to check for response inconsistencies.

Negativity. The Negativity scale contains items that are part of the executive functioning scales, and it identifies when a participant gives an abnormally large number of “often” responses on negative items (e.g., endorsing that the participant often talks at the wrong time).

Results

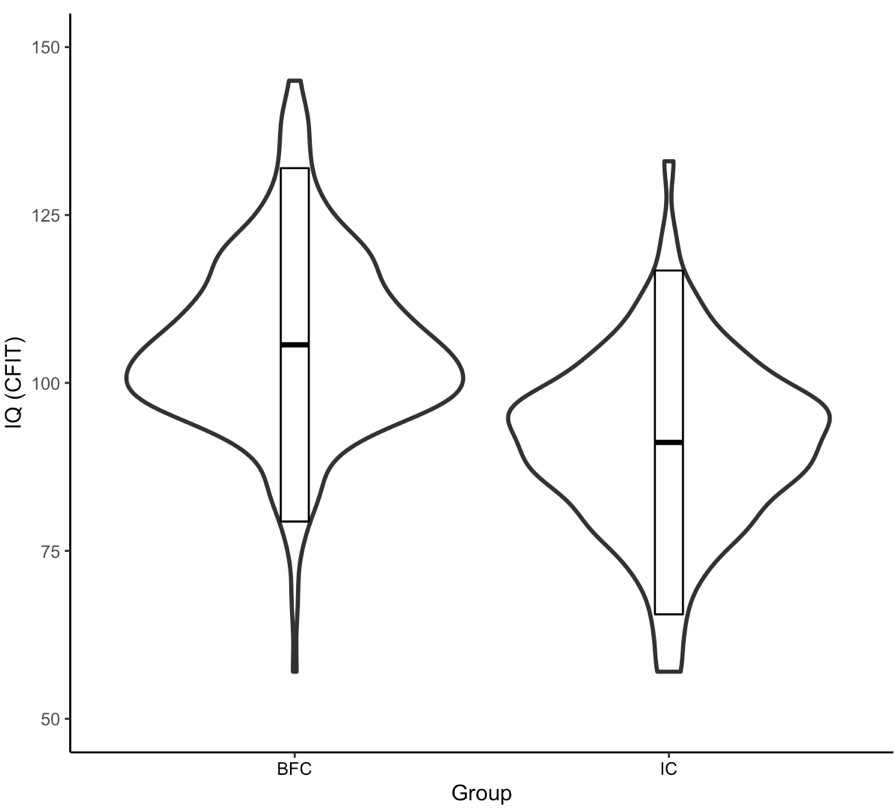

Demographic Analysis. Although the BRIEF2 manual explains that validity measures have been tested in a typical standardization sample as well as a variety of clinical samples, including cognitive impairment, we checked IQ scores on the CFIT for the current sample to make sure that the majority of participants did not have very low IQ. The mean IQ score was 99.31 (MeanBFC=105.67, SDBFC=13.15; MeanIC=91.14, SDIC=12.80; see Figure 1). Thirteen participants had scores lower than 70 (2.3%; 5 with 57, 3 with 62, 5 with 66), which is a common threshold for diagnosing intellectual disability [Fernell, 2020]. They were retained in the current analyses due to their small number and the BRIEF manufacturer’s suggestion that the validity scales work in most clinical groups and that the BRIEF works with a broad range of participants. Furthermore, only a small number of participants flagged on the validity scales had IQ below 70, so excluding them would not have changed the results by much (see Table 1 and 2 captions and Negativity Scale results below).

BRIEF Reliability. Cronbach’s alpha values were computed for our sample to compare to the BRIEF2 manual [Gioia, 2015]. Scores for our whole dataset (alpha range 0.72 to 0.83 across scales) and for the individual BFC (0.67 to 0.83) and IC (0.75 to 0.82) groups largely overlapped with the standardization (0.81 to 0.90) and atypical clinical (0.71 to 0.85) samples described in the BRIEF2 manual [Gioia, 2015].
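For readers unfamiliar with the statistic, the following is a minimal sketch of how Cronbach's alpha can be computed from an item-by-respondent score matrix using the standard formula. The function name and the toy ratings are illustrative assumptions; they are not the study data or the authors' analysis code.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's summed score
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Toy ratings (1 = never, 2 = sometimes, 3 = often); NOT study data
toy_scores = [[1, 2, 1], [2, 2, 3], [3, 3, 3], [1, 1, 2], [2, 3, 2]]
toy_alpha = cronbach_alpha(toy_scores)
```

In practice the function would be applied separately to the items of each subscale, which is what yields a range of alpha values across scales.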

CFIT Reliability. Reliability of the CFIT in our overall sample was checked using Cronbach’s alpha. For our whole sample, the range of Cronbach’s alpha across subtests was 0.88 to 0.91. Alpha values for the BFC (0.77 to 0.90) and IC (0.81 to 0.87) groups largely overlapped with each other. The CFIT manual does not clarify how reliability was computed for the manual, so we cannot directly compare the manual to our sample, but the manual gives a value of 0.76 for consistency over items [Cattell, 1973].

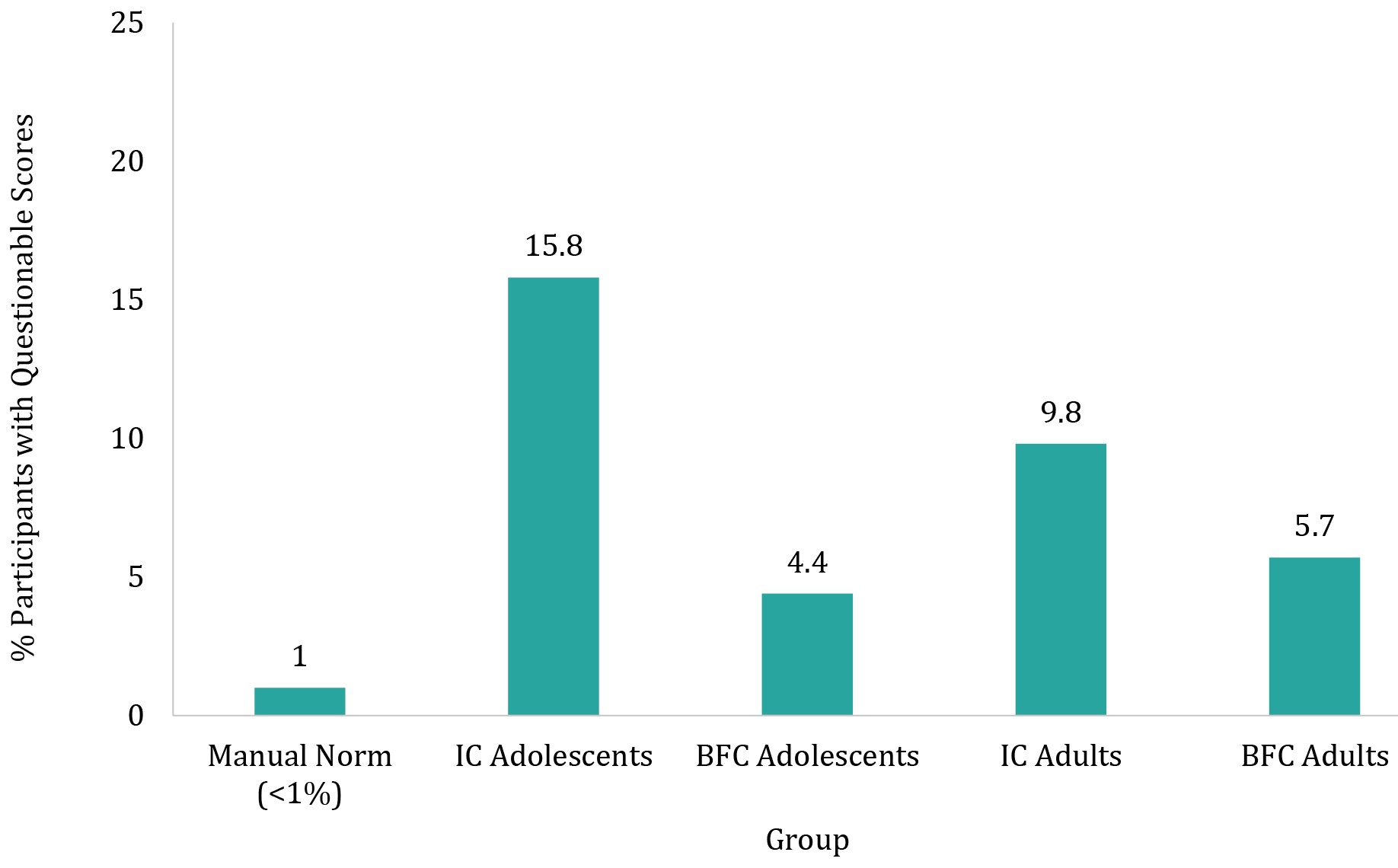

Infrequency Scale. According to the professional manual for the BRIEF2 [Gioia, 2015], questionable scores on the infrequency scale, indicated by selecting “sometimes” or “often” on at least one infrequency item, indicate a greater than 99th percentile score, even in most clinical groups and in individuals with cognitive impairment (tested in U.S. samples). However, in the current overall sample, questionable scores on this scale occurred for 8.6% of participants (see Table 1), which is significantly more often than 1% (z=18.27, p<.0001). Even for the current study subgroup with the lowest rate of questionable scores (BFC adolescents; 4.4%; see Figure 2), the rate was significantly higher than the 1% indicated by U.S. norms (z=2.82, p<.01).
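The comparisons against the 1% U.S. norm can be illustrated with a one-sample z-test for a proportion. The sketch below assumes a normal approximation with the standard error computed under the null proportion and a two-sided p-value; the function name is hypothetical, and this is not necessarily the exact procedure used in the original analysis. Values computed from raw counts may differ in the second decimal from reported statistics if percentages were rounded before testing.

```python
import math


def proportion_z_test(flagged, n, p0=0.01):
    """One-sample z-test of an observed proportion against a null proportion p0.

    Uses the normal approximation with the standard error computed under p0.
    Returns (z, two-sided p-value).
    """
    p_hat = flagged / n
    se = math.sqrt(p0 * (1 - p0) / n)
    z = (p_hat - p0) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Counts taken from the text: flagged participants out of 572
z_incons_all, p_incons_all = proportion_z_test(101, 572)  # questionable + inconsistent
z_incons_only, p_incons_only = proportion_z_test(14, 572)  # inconsistent only
z_negativity, p_negativity = proportion_z_test(1, 572)     # negativity scale
```

A positive z indicates more flagged participants than the 1% norm would predict, and a negative z indicates fewer.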

Figure 1. Violin plots of IQ scores by institutionalization status. Rectangles represent two standard deviations from the mean

Figure 2. Percentages of questionable scores on infrequency items split by age groups and institutionalization history

Table 1

Count data and percentages of questionable scores on infrequency items split by age groups and institutionalization history

| Group | Questionable Scores |
|---|---|
| Total | 49/572=8.6%* |
| Adolescents | 21/182=11.5% |
| IC Adolescents | 18/114=15.8% |
| BFC Adolescents | 3/68=4.4% |
| Adults | 28/390=7.2% |
| IC Adults | 14/143=9.8% |
| BFC Adults | 14/247=5.7% |
| IC Total | 32/257=12.5% |
| BFC Total | 17/315=5.4% |

Notes. * — with individuals with IQ below 70 excluded, 44 participants had questionable scores on the infrequency scale. Therefore, a high proportion of low IQ individuals were flagged on this scale (4 out of 13). However, even with those who have an IQ below 70 excluded, the percentage of participants flagged on this scale in this sample remains high compared to a U.S. sample (8.2% versus <1%).

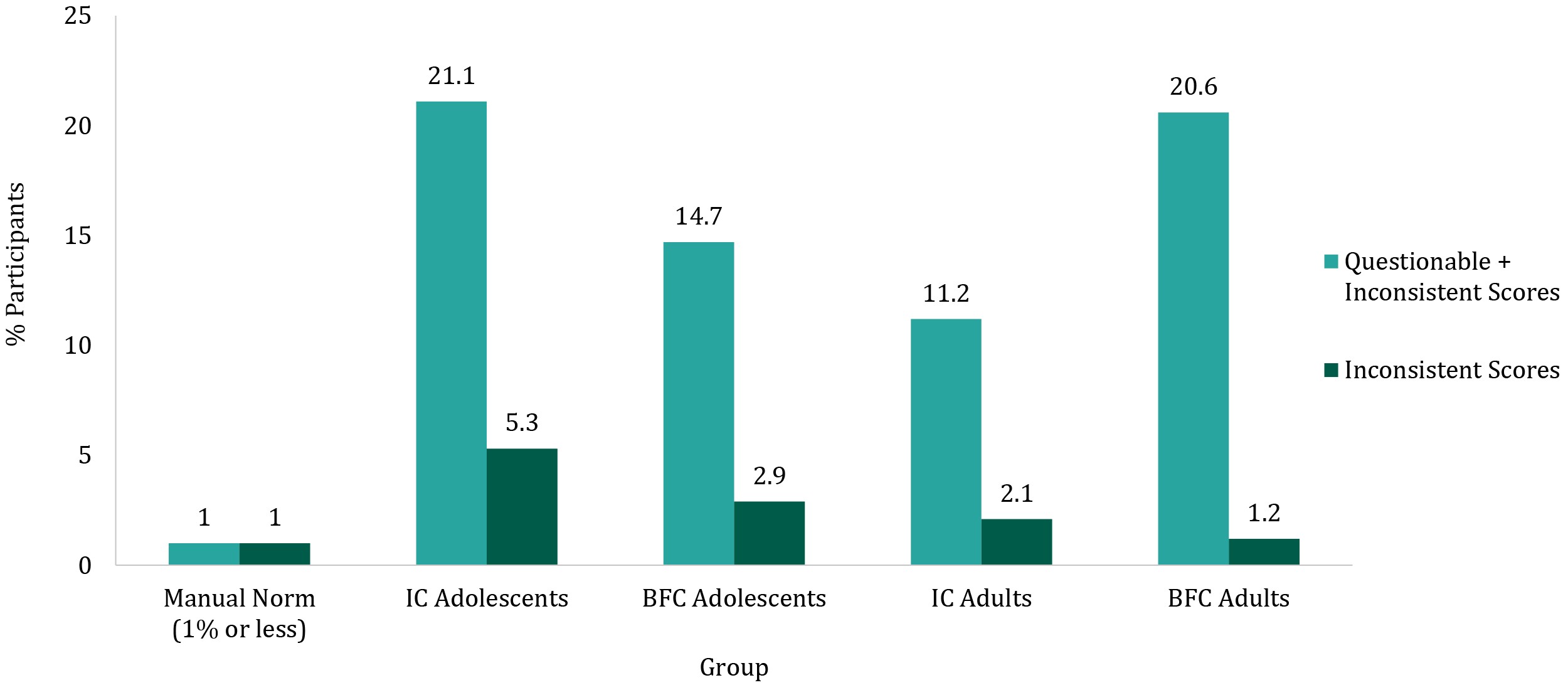

Inconsistency Scale. Inconsistency scores are computed by summing the absolute value of the differences between similar items, and the questionnaire contains 8 similar item pairs. A score less than or equal to 5 is acceptable (98th percentile or lower according to U.S. norms), 6-7 is questionable (99th percentile), and 8+ indicates inconsistent responses (>99th percentile). In the current sample, both questionable + inconsistent scores (101/572 or 17.7%) and inconsistent scores alone (14/572 or 2.4%) occurred significantly more often than 1% of the time (z=40.04, p<.0001; z=3.48, p<.001, respectively; see Table 2 and Figure 3), which is the maximum amount of questionable and inconsistent scores according to the manual normed on U.S. participants.
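The scoring rule described above can be sketched as follows. The item numbers in `ITEM_PAIRS` are hypothetical placeholders, because the actual item pairings are defined in the BRIEF2 professional manual and are not reproduced here; only the summing rule and the cutoffs (5 or less, 6-7, 8 or more) come from the text.

```python
# Hypothetical item pairings for illustration only; the real BRIEF2 pairs
# are defined in the professional manual.
ITEM_PAIRS = [(1, 2), (3, 4), (5, 6), (7, 8), (9, 10), (11, 12), (13, 14), (15, 16)]


def inconsistency_score(responses, item_pairs=ITEM_PAIRS):
    """Sum of absolute differences between the ratings (1-3) of paired items."""
    return sum(abs(responses[a] - responses[b]) for a, b in item_pairs)


def classify_inconsistency(score):
    """Apply the cutoffs described in the text."""
    if score <= 5:
        return "acceptable"
    if score <= 7:
        return "questionable"
    return "inconsistent"


# Toy respondent: answers "never" (1) everywhere except three items rated "often" (3)
responses = {item: 1 for item in range(1, 17)}
responses[2] = responses[4] = responses[6] = 3  # three pairs now differ by 2 points
score = inconsistency_score(responses)
label = classify_inconsistency(score)
```

Because each pair can differ by at most 2 points, a respondent needs large discrepancies on at least three of the eight pairs to leave the acceptable range.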

Negativity Scale. To compute negativity scores, the number of items with a score of “often” on 8 negativity items is counted. A total of 6 or less is considered acceptable, 7 is elevated, and 8 is highly elevated. According to the normative samples used in the BRIEF2 manual, elevated scores are considered 99th percentile, and highly elevated are above the 99th percentile (according to U.S. samples). Only one participant in the current sample scored above a 6 (see Table 3), which was significantly less than the 1% indicated as likely based on U.S. norms (z=-1.98, p<.05). The one flagged individual had an IQ above 70, so exclusions based on IQ would not have changed the results significantly.
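As a minimal sketch of the counting rule just described, the code below tallies “often” responses and applies the stated cutoffs. The item numbers in `NEGATIVITY_ITEMS` are hypothetical placeholders, since the actual negativity items are listed only in the BRIEF2 manual.

```python
OFTEN = 3  # rating code for "often" (1 = never, 2 = sometimes, 3 = often)

# Hypothetical item numbers for illustration only
NEGATIVITY_ITEMS = [1, 2, 3, 4, 5, 6, 7, 8]


def negativity_score(responses, items=NEGATIVITY_ITEMS):
    """Count how many negativity items were rated 'often'."""
    return sum(1 for item in items if responses[item] == OFTEN)


def classify_negativity(score):
    """Apply the cutoffs described in the text."""
    if score <= 6:
        return "acceptable"
    if score == 7:
        return "elevated"
    return "highly elevated"


# Toy respondent: "often" on seven of the eight items, "sometimes" on the last
toy_responses = {item: OFTEN for item in NEGATIVITY_ITEMS}
toy_responses[8] = 2
toy_score = negativity_score(toy_responses)
toy_label = classify_negativity(toy_score)
```

The high threshold means that only a near-uniform pattern of “often” responses is flagged.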

Figure 3. Percentages of questionable + inconsistent or inconsistent only scores derived from inconsistency items, split by age groups and institutionalization history

Table 2

Count data and percentages of questionable + inconsistent or inconsistent only scores derived from inconsistency items, split by age groups and institutionalization history

| Group | Questionable + Inconsistent Scores | Inconsistent Scores |
|---|---|---|
| Total | 101/572=17.7%* | 14/572=2.4%* |
| Adolescents | 34/182=18.7% | 8/182=4.4% |
| IC Adolescents | 24/114=21.1% | 6/114=5.3% |
| BFC Adolescents | 10/68=14.7% | 2/68=2.9% |
| Adults | 67/390=17.2% | 6/390=1.5% |
| IC Adults | 16/143=11.2% | 3/143=2.1% |
| BFC Adults | 51/247=20.6% | 3/247=1.2% |
| IC Total | 40/257=15.6% | 9/257=3.5% |
| BFC Total | 61/315=19.4% | 5/315=1.6% |

Notes. * — with individuals with IQ below 70 excluded, 94 participants had questionable or inconsistent scores and 12 had inconsistent scores on the inconsistency scale. Therefore, a high proportion of low IQ individuals were flagged on this scale (7 out of 13). However, even with those who have an IQ below 70 excluded, the percentage of participants flagged on this scale in this sample remains high compared to a U.S. sample (17.5% questionable or inconsistent versus 1%; 2.2% inconsistent versus <1%).

Table 3

Count data and percentages of elevated or highly elevated scores on negativity items, split by age groups and institutionalization history

| Group | Scores |
|---|---|
| Total Elevated | 0/572=0% |
| Total Highly Elevated | 1/572=0.2% |
| Adolescents Highly Elevated | 1/182=0.5% |
| IC Adolescents | 1/114=0.9% |
| BFC Adolescents | 0/68=0% |
| Adults Highly Elevated | 0/390=0% |
| IC Adults | 0/143=0% |
| BFC Adults | 0/247=0% |
| IC Total Highly Elevated | 1/257=0.4% |
| BFC Total Highly Elevated | 0/315=0% |

Relations between Validity Scales, Covariates, and BRIEF. Because percentages of individuals with questionable scores on the Infrequency and Inconsistency scales were higher than indicated in the BRIEF2 Professional Handbook with norms created within the United States, despite excluding those with head trauma or neurological illness and despite not explicitly recruiting a clinical population, further exploration was warranted. The negativity scale is not analyzed in this section because only one participant had an elevated negativity score.

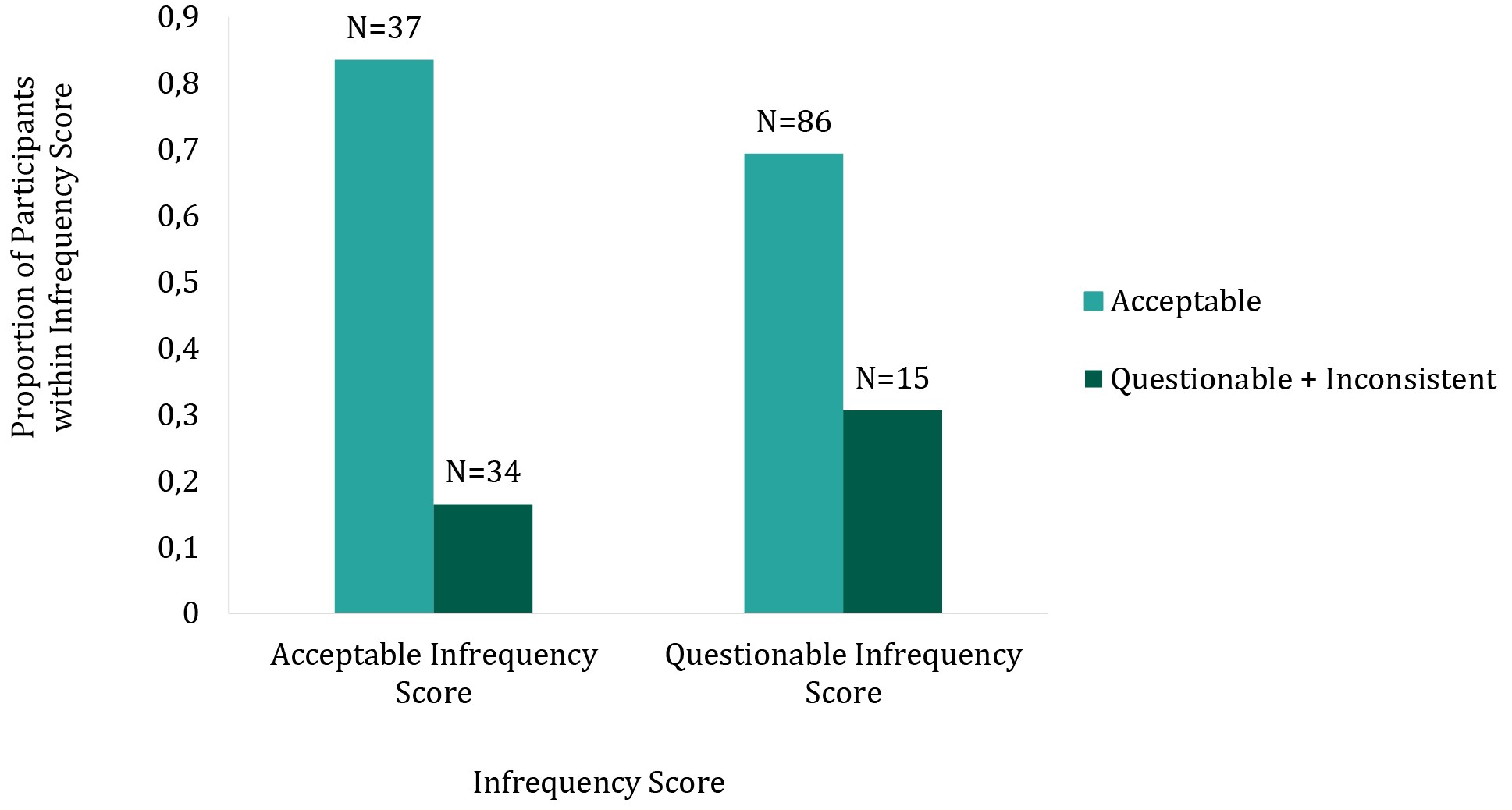

Infrequency and Inconsistency. If the reason for the high number of questionable responses on the infrequency scale was that some participants selected answers arbitrarily or inaccurately, it seemed likely that participants with questionable responses on the infrequency scale would also be prone to answering similar questions inconsistently and be flagged by the inconsistency scale. Although a majority of participants with questionable scores on the infrequency scale had acceptable scores on the inconsistency scale (34/49; see Figure 4), a higher proportion of individuals with questionable infrequency scores had questionable or inconsistent answers on the inconsistency scale than those with acceptable infrequency scores (χ2(1, N=572)=6.19, p<.05).
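The association test above can be illustrated with a Pearson chi-square test on the 2 x 2 table of infrequency status by inconsistency status. The cell counts below are derived from figures reported in this paper (49 questionable infrequency scores, 34 of which had acceptable inconsistency scores, and 101 non-acceptable inconsistency scores among 572 participants). The sketch assumes no continuity correction, which is an assumption about the original analysis, and the function name is hypothetical.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test (no continuity correction) for a 2x2 table.

    Returns (chi2, p-value) with 1 degree of freedom.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    # Survival function of chi-square with 1 df: P(X > chi2) = erfc(sqrt(chi2 / 2))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: infrequency questionable / acceptable
# Columns: inconsistency non-acceptable / acceptable
counts = [[15, 34],
          [86, 437]]
chi2, p_value = chi_square_2x2(counts)
```

With these counts, about 31% (15/49) of participants with questionable infrequency scores had non-acceptable inconsistency scores, compared with about 16% (86/523) of those with acceptable infrequency scores.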

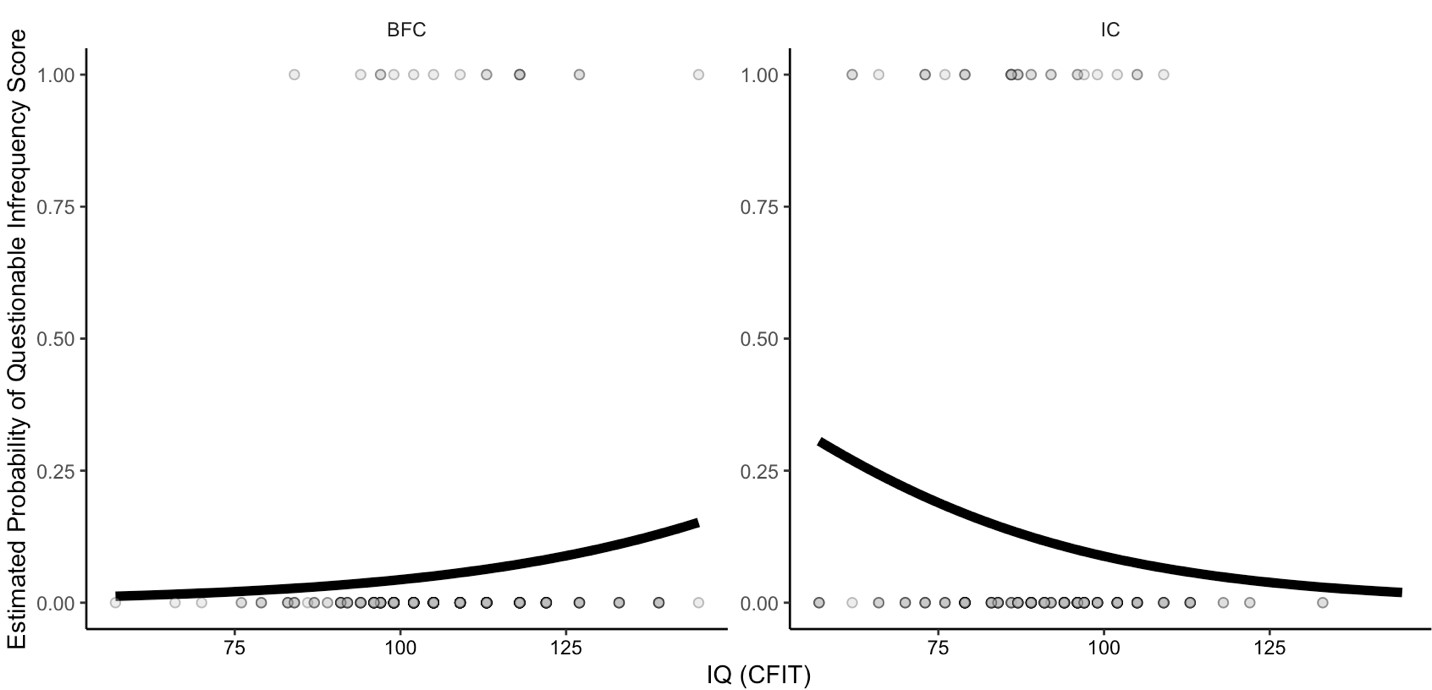

IQ and Validity Scales. For the Infrequency and Inconsistency scales, a logistic generalized linear model testing the effects of IQ, Group (IC, BFC), Age Group (Adult, Adolescent), and the IQ x Group interaction on whether scores were questionable was run using the glm function in R with a binomial distribution specified.
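The model described above can be written out explicitly. The notation below is an assumed sketch: the symbols, the 0/1 coding of Group and Age Group, and the choice of reference levels are illustrative and are not taken from the original analysis script.

```latex
\operatorname{logit}\,\Pr(Q_i = 1)
  = \beta_0
  + \beta_1\,\mathrm{IQ}_i
  + \beta_2\,\mathrm{Group}_i
  + \beta_3\,\mathrm{AgeGroup}_i
  + \beta_4\,(\mathrm{IQ}_i \times \mathrm{Group}_i)
```

Here $Q_i = 1$ if participant $i$ has a questionable score on the scale being modeled and $Q_i = 0$ otherwise. Under this coding, $\beta_1$ is the IQ slope (on the log-odds scale) in the reference group and $\beta_1 + \beta_4$ is the slope in the other group, so a nonzero $\beta_4$ corresponds to an IQ x Group interaction.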

Figure 4. Proportions of participants with acceptable or questionable infrequency scores and those with acceptable or questionable + inconsistent inconsistency scores, plus count data

Infrequency. The IQ x Group interaction had a statistically significant effect on the probability of getting a questionable infrequency score (χ2(1)=7.62, p<.01; see Figure 5). Follow-up tests indicated that in the BFC group, IQ scores were not significantly related to the probability of getting a questionable infrequency score (z=1.70, p=.09), and in the IC group, IQ scores were negatively related to the probability of getting a questionable infrequency score (z=-2.30, p<.05). The main effect of Institutionalization was statistically significant (χ2(1)=9.14, p<.01), such that those with a history of institutionalization were more likely to have questionable infrequency answers than those raised in biological families. The Age effect was not statistically significant.

Inconsistency. Questionable and inconsistent scores were combined into one “non-acceptable” category. There were no statistically significant effects of IQ, Group (BFC, IC), IQ x Group, or Age Group (adolescent, adult) on the probability of getting non-acceptable answers on the inconsistency scale.

BRIEF Performance and Validity Scales. A linear regression tested the effects of validity (Acceptable or Questionable) on each BRIEF subscale, controlling for Group (IC, BFC), Age Group (Adolescent, Adult), and Gender (male, female). For all subscales of the BRIEF and for both Infrequency and Inconsistency subscales, questionable answers were associated with worse executive function (see Table 4).

Figure 5. IQ by predicted infrequency probability in each group

Notes. Black lines represent the GLM-predicted probability of having a questionable infrequency score. Dots represent the raw data, with 0=acceptable and 1=questionable. Overlapping dots are darker.

Table 4

F-values, p-values, and directions of effects for linear models testing effects of questionable Infrequency and Inconsistency scores on each BRIEF subscale

| BRIEF Subscale | Infrequency F | Infrequency p | Inconsistency F | Inconsistency p |
|---|---|---|---|---|
| Inhibit | 17.19 | <.0001 | 17.68 | <.0001 |
| Working Memory | 33.11 | <.0001 | 42.88 | <.0001 |
| Shift | 9.45 | <.01 | 18.17 | <.0001 |
| Plan | 20.48 | <.0001 | 25.63 | <.0001 |
| Self-Monitor | 31.74 | <.0001 | 18.06 | <.0001 |
| Task Completion | 12.91 | <.001 | 48.59 | <.0001 |
| Emotional Control | 6.60 | <.05 | 22.07 | <.0001 |

Discussion

The current study compared Russian samples raised exclusively in biological families or at least partially in institutional care to U.S. norms on three validity scales built into the BRIEF2 Self-Report form [Gioia, 2015]. Results indicate that for scales designed to flag highly infrequent (abnormal) or inconsistent answers, significantly more individuals were flagged in our overall sample (8.6% and 17.7%, respectively) compared to typical and clinical samples in the U.S. (~1% or less). The infrequency scale, designed to detect highly abnormal answers, was also sensitive to IQ and a history of institutionalization. Questionable answers on infrequency and inconsistency scales were also associated with worse executive functioning on all subscales of the BRIEF. Although the BRIEF2 was designed for use in individuals aged 11–18, performance on the infrequency and inconsistency validity scales did not significantly vary by age group (adolescent, adult). Results point to possible cultural differences in responding between the U.S. and Russia and highlight the need for culturally sensitive validity checks before translated measures are used extensively in research or clinical practice.

The high rates of questionable scores on the infrequency and inconsistency subscales and low rate of elevated scores on the negativity scale in the current study were not driven by any particular question(s). For example, the three infrequency items had similar numbers of questionable responses (26, 22, and 24 questionable responses per item). Additionally, even though our sample included previously institutionalized individuals who may be more prone to lower IQ [van IJzendoorn, 2008] and executive functioning [Lamm, 2018; McDermott, 2012; Merz, 2016] than non-institutionalized individuals, the results seem unlikely to be driven by low functioning in this sample. One reason for this conclusion is that the BRIEF2 validity scales were tested in the U.S. in clinical samples and those with cognitive impairment, and they still showed low levels of questionable answers. Additionally, mean IQ in this sample (99.31) was close to the U.S. average (100). Furthermore, even when considering only the intended age group (our adolescent group) and participants raised in biological families, the Russian sample had more questionable answers on the infrequency and inconsistency scales than U.S. norms. Lastly, although several of our 13 participants with IQ below 70 were flagged on the inconsistency and infrequency scales, this was a small percentage of our total participants. Thus, excluding them would still have left us with higher numbers of questionable responses on the infrequency and inconsistency scales. The questionable scores, therefore, do not seem to be driven solely by impaired intelligence (although questionable scores in the IC group were associated with lower IQ) and are likely to be elevated, at least in part, for another reason.

The relatively high number of individuals flagged on infrequency and inconsistency validity scales could be due in part to cross-cultural differences in assessments, specifically unfamiliarity with the type of testing [Byrne, 2016]. Although the Russian education system uses multiple choice tests, psychological screening in Russia has historically been less common compared to western countries [Balachova, 2001; Grigorenko, 1998]. Therefore, participants may not have been as comfortable with self-reflection and self-report questionnaires. The differences found here may also be due to unidentified cultural differences between Russia and the U.S.

Another possibility is that the high infrequency and inconsistency scores reflect a subset of individuals who answered quickly or carelessly. Although participants had a one-hour break halfway through testing, the larger study visit took approximately five hours. Therefore, some participants may have answered indiscriminately to rush through testing or made errors as they became fatigued. Furthermore, we saw strong relations between two of the validity scales (inconsistency and infrequency) and the BRIEF subscales that measure executive functioning, such that those with lower executive functioning were more likely to be flagged on the validity scales. (Note, however, that it remains unclear how much the BRIEF executive function scores can be trusted in individuals flagged by the validity scales.) Careless answering or attentional difficulties could explain the high percentage of participants flagged for inconsistent answers. Additionally, since only one response of “sometimes” or “often” on any of the three infrequency items was required for a participant to be flagged on the infrequency scale, indiscriminate answering would likely flag more than the U.S. norm of 1% of participants on this scale. However, most participants who were flagged on one of these validity scales were not flagged on the other (only 15/572 were flagged on both), suggesting that a majority of questionable scores may not have been driven by one set of participants choosing answers at random.
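To illustrate how sensitive the infrequency rule is to indiscriminate answering, the following minimal sketch computes the chance that a purely random responder would be flagged. It assumes, for illustration only, that such a responder chooses “never”, “sometimes”, or “often” with equal probability and independently on each of the three infrequency items; the function name and the uniform-response assumption are ours and are not part of the BRIEF2 scoring procedure.

```python
from fractions import Fraction

# Assumption (illustrative): a fully indiscriminate responder picks
# "never", "sometimes", or "often" with equal probability on each item.
P_NEVER = Fraction(1, 3)
N_INFREQUENCY_ITEMS = 3  # three infrequency items, as described in the text


def p_infrequency_flag(p_never=P_NEVER, n_items=N_INFREQUENCY_ITEMS):
    """Probability that at least one item is answered "sometimes" or "often".

    A participant avoids the flag only by answering "never" on every item,
    so the flag probability is 1 minus the probability of all "never".
    """
    return 1 - p_never ** n_items


if __name__ == "__main__":
    p = p_infrequency_flag()
    print(f"Hypothetical random responder flagged with probability {p} "
          f"(about {float(p):.1%})")
```

Under these assumptions, nearly every random responder would be flagged, so even a small subset of indiscriminate responders could push the flag rate above the 1% U.S. norm.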

Low engagement on the BRIEF in some participants could also partially explain why the negativity scale flagged no participants in the current study as “elevated” and just one (~0.2%) as “highly elevated”. To be flagged on the negativity scale, a participant had to answer “often” on 7-8 of the 8 negativity items [Gioia, 2015]. If a subset of participants was indiscriminately providing a range of answers, they would be unlikely to answer “often” on so many negativity items, which could slightly lower the overall negativity rate. However, it remains unclear why such a small number (1/572) was flagged on this scale in our sample or how meaningfully different this is from the 1% in U.S. norms, given that reaching 1% would have required only 4-5 more participants to be flagged in our sample.
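The arithmetic behind this paragraph can be sketched as follows. The first calculation assumes, for illustration only, that an indiscriminate responder answers “often” with probability 1/3 on each of the 8 negativity items, independently; the second compares the observed count (1/572) with the number of flagged participants that would correspond to the 1% U.S. norm. The function name and the uniform-response assumption are ours.

```python
from fractions import Fraction
from math import ceil, comb

N_PARTICIPANTS = 572        # sample size reported in the text
N_FLAGGED = 1               # participants flagged on the negativity scale
US_NORM = Fraction(1, 100)  # 1% flag rate in U.S. norms


def p_negativity_flag(p_often=Fraction(1, 3), n_items=8, min_often=7):
    """Binomial probability of answering "often" on at least min_often items.

    Assumption (illustrative): items are answered independently, each with
    probability p_often of an "often" response.
    """
    return sum(
        comb(n_items, k) * p_often ** k * (1 - p_often) ** (n_items - k)
        for k in range(min_often, n_items + 1)
    )


observed_rate = Fraction(N_FLAGGED, N_PARTICIPANTS)
# Smallest number of flagged participants whose rate is at least 1%.
needed_for_norm = ceil(US_NORM * N_PARTICIPANTS)

if __name__ == "__main__":
    print(f"Random-responder flag rate: {float(p_negativity_flag()):.2%}")
    print(f"Observed flag rate: {float(observed_rate):.2%}")
    print(f"Flags needed to reach 1%: {needed_for_norm} "
          f"({needed_for_norm - N_FLAGGED} more than observed)")
```

Under these assumptions, a random responder would be flagged with probability 17/6561 (about 0.26%), which is below the 1% norm. Reaching at least 1% of 572 would take 6 flagged participants, while 5 flagged participants would correspond to roughly 0.9%, in line with the 4-5 additional participants noted above.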

A limitation of the current study is that we cannot conclusively determine the reason(s) for the differences in validity scale performance between our sample and U.S. norms, so cultural differences would need to be explored in future research. Additionally, because we focused on recruiting individuals with a history of institutionalization, our sample did not match the larger population of the Russian Federation on all demographic measures. A future direction could be to norm the validity scales in larger Russian samples of neurotypical and clinical participants, as was done for the U.S. BRIEF2 scoring manual [Gioia, 2015]. Future work could also apply the same exclusion criteria as the U.S. standardization samples, such as psychotropic medication use. Researchers could directly compare Russian and U.S. samples in the same study and could use a study design with fewer additional measures to reduce the potential for rushing or fatigue effects. The reason(s) for the Group × IQ interaction that we saw on the infrequency scale also remain unclear and should be explored further.

Conclusions

The primary goal of this report was to evaluate the usability of the BRIEF2 Self-Report Form validity scales in a Russian sample. Cultural validity is important when a scale is used in new populations [Byrne, 2016; Leong, 2019; Reynolds, 2012], and here we demonstrated that the BRIEF2 validity scales may not perform the same in Russian and U.S. samples. The infrequency scale also did not perform the same in individuals raised in biological families as in those raised at least partially in institutions. Future work will evaluate the BRIEF2 further, using analyses such as item response theory, confirmatory factor analysis [Fournet, 2015], and correlations between BRIEF2 scores and EEG-based neural measures of executive functioning. Until results are clearer, researchers and clinicians should use translations of this scale in Russian samples with caution.